There are different ways to collect logs from a Kubernetes cluster:

- have the application push the logs into the logging service directly
- deploy a log collector agent to each cluster node to collect the logs

I'm going to cover the agent-based option, with a dedicated agent running on the cluster nodes. The basic idea in this case is to utilize the Docker engine under Kubernetes. When you log from a container to standard out/error, Docker simply stores those logs on the filesystem in specific folders. Since a pod consists of Docker containers, and those containers are scheduled on a concrete K8S node, the pod's logs end up on that node's filesystem.

When you have an agent application running on every cluster node, you can easily set up a watch on those log files, and when something new shows up, like a new log line, you can simply collect it. Fluentd is one agent that can work this way. The only thing left is to figure out a way to deploy the agent to every K8S node. Luckily, Kubernetes provides a feature for this, called DaemonSet. When you set up a DaemonSet, which is very similar to a normal Deployment, Kubernetes makes sure that an instance is deployed to every cluster node (or to a selected subset of them), so we're going to use Fluentd with a DaemonSet.

# EFK stack

As an example, I'm going to use the EFK (ElasticSearch, Fluentd, Kibana) stack.
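To make the node-agent idea described above a bit more concrete, here is a minimal Java sketch of what "watching a log file and collecting new lines" can look like. It is not how Fluentd is implemented; the class name `LogTailSketch` and the temporary file it reads are made up for illustration, and it deliberately ignores log rotation and truncation.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: remembers how far a log file has been read and returns only new lines. */
class LogTailSketch {
    private final Path file;
    private long offset = 0; // number of bytes already handed out as complete lines

    LogTailSketch(Path file) {
        this.file = file;
    }

    /** Returns the complete lines appended since the previous call (no rotation handling). */
    List<String> poll() {
        List<String> lines = new ArrayList<>();
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "r")) {
            raf.seek(offset);
            byte[] rest = new byte[(int) (raf.length() - offset)];
            raf.readFully(rest);
            int start = 0;
            for (int i = 0; i < rest.length; i++) {
                if (rest[i] == '\n') {
                    lines.add(new String(rest, start, i - start, StandardCharsets.UTF_8));
                    start = i + 1;
                }
            }
            // A trailing partial line is left unread until its newline arrives.
            offset += start;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for a container log file on the node; the real path depends on the runtime.
        Path log = Files.createTempFile("container", ".log");
        LogTailSketch tail = new LogTailSketch(log);

        Files.writeString(log, "first line\n", StandardOpenOption.APPEND);
        System.out.println(tail.poll());

        Files.writeString(log, "second line\nthird", StandardOpenOption.APPEND);
        System.out.println(tail.poll());

        Files.delete(log);
    }
}
```

In the second `poll()` call only `second line` is returned, because `third` has not been terminated by a newline yet. A real agent adds a lot on top of this, such as persisting the read position across restarts and following rotated files.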


So, in this article I'm going to cover how to set up an EFK stack on Kubernetes, with an example Java-based Spring Boot application, to support multiline log messages.

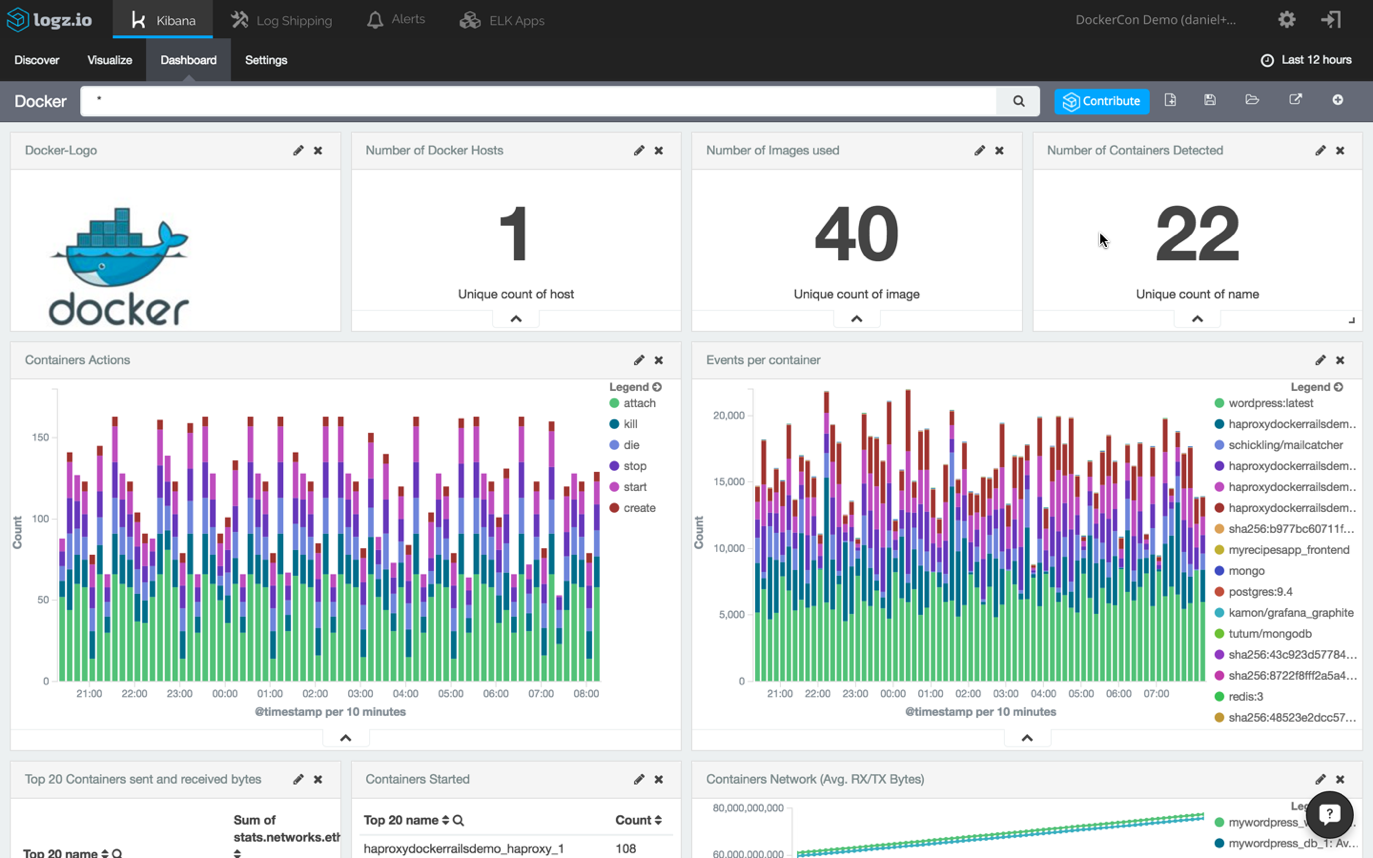

However, adapting the logging infrastructure to the application environment is just as critical as having it. One problem I see with the basic, tutorial-based setups is that they are just starting points, and the service often ends up running with its default settings.

For example, let's say you have an application running on Kubernetes. You want Kibana as a visualization tool for your logs, so you just need a log collector; let's use Fluentd. As soon as you set up the EFK (ElasticSearch, Fluentd, Kibana) stack, you can kick-start the project quickly, because logs are going to flow in and Kibana will visualize them for you. So where's the catch?

For normal, single-line log messages, this is going to work flawlessly. But any engineer who has seen even a single exception knows that a stack trace is a multiline log message. Single lines out of an exception trace don't give an engineer much value during an investigation.
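The multiline problem can be illustrated with a small Java sketch. A collector that reads line by line sees every line of a stack trace as a separate record; one common way to reassemble them is to treat a line as the start of a new event only when it matches a "first line" pattern, and to append everything else to the previous event. Fluentd's multiline parser offers a comparable first-line setting (`format_firstline`), but the code below is only an illustration of the idea, not Fluentd's implementation. The class name, the sample log lines, and the assumption that every event starts with a `yyyy-MM-dd` date are all made up for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical sketch: groups raw log lines into events using a first-line pattern. */
class MultilineGroupingSketch {
    // Assumption: every new log event starts with a date such as "2024-01-15".
    private static final Pattern FIRST_LINE = Pattern.compile("^\\d{4}-\\d{2}-\\d{2}");

    static List<String> group(List<String> rawLines) {
        List<String> events = new ArrayList<>();
        StringBuilder current = null;
        for (String line : rawLines) {
            boolean startsEvent = FIRST_LINE.matcher(line).find();
            if (startsEvent || current == null) {
                // Flush the previous event, if any, and start a new one.
                if (current != null) {
                    events.add(current.toString());
                }
                current = new StringBuilder(line);
            } else {
                // Continuation line (e.g. part of a stack trace): attach it to the current event.
                current.append('\n').append(line);
            }
        }
        if (current != null) {
            events.add(current.toString());
        }
        return events;
    }

    public static void main(String[] args) {
        // Made-up sample lines, shaped like what a line-by-line collector would receive.
        List<String> raw = List.of(
                "2024-01-15 10:00:00 INFO  Starting request",
                "2024-01-15 10:00:01 ERROR Request failed",
                "java.lang.IllegalStateException: boom",
                "\tat com.example.Demo.handle(Demo.java:12)",
                "2024-01-15 10:00:02 INFO  Done");

        for (String event : group(raw)) {
            System.out.println("--- event ---");
            System.out.println(event);
        }
    }
}
```

With the sample input, the five raw lines collapse into three events, and the exception message and its stack frame stay attached to the `ERROR` line they belong to.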

Monitoring and logging services are crucial, especially in a cloud environment and for a microservice architecture. Drawing the line between the two is very difficult and might depend on the application specifics, but as a general rule, I think no application and its development team can operate efficiently if the logging infrastructure is not there. Think about having a bug in production and no logging infrastructure. How can the engineers figure out what the problem is? Well, it's not impossible, it's just very difficult and time-consuming.


For a well-functioning application development team, it's important to have the appropriate infrastructure behind it, as a structured foundation. If the infrastructure does not support the application use cases or the software development practices, it isn't a good enough base for growth.
